Chip design is challenging

"Chips like GPUs require nearly 1,000 people to build, and each must understand how pieces of the design work together as they work to continuously improve them"

Bryan Catanzaro, VP of Applied Deep Learning Research, NVIDIA

Chip design is challenging, and the vast majority of that difficulty comes from functional and timing verification. Manufacturing a chip is slow and expensive; mistakes in the design are doubly so.

Correct by construction

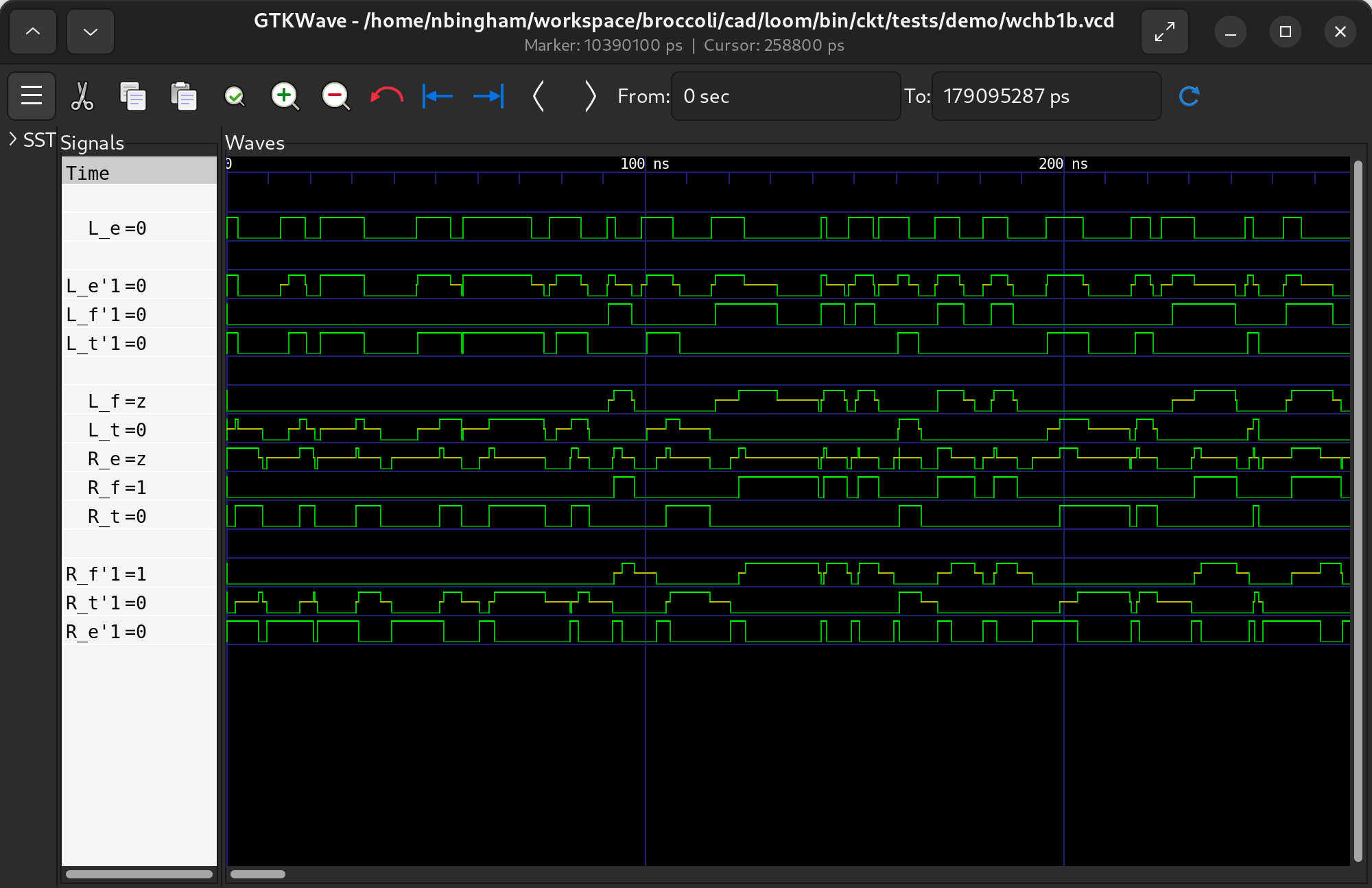

No timing required

Synthesize your design directly from your functional specification. Synthesis is correct by construction and generates circuits that function correctly regardless of timing. This dramatically reduces the need for both functional and timing verification, allowing you to focus on your product.
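As a toy illustration of what "regardless of timing" means, the sketch below simulates a Muller C-element, a standard building block of asynchronous circuits. It is not Loom's output or synthesis algorithm; the function names are hypothetical, and it assumes the environment follows a four-phase handshake (both inputs rise, then both fall). Under that assumption, random input delays change when the output switches, but never the sequence of values it produces.

```python
import random


def c_element(a: int, b: int, prev: int) -> int:
    # Muller C-element: follow the inputs when they agree, otherwise hold the old value
    return a if a == b else prev


def run_handshake(rng: random.Random) -> list[int]:
    """Simulate one four-phase cycle with random input delays (hypothetical helper).

    Both inputs rise, each after its own random delay, then both fall.
    Returns the sequence of output values the C-element switches to.
    """
    a = b = out = 0
    outputs = []
    for value in (1, 0):  # rising phase, then falling phase
        # The arrival order of the two inputs is decided purely by the random delays
        arrivals = sorted([(rng.uniform(0.0, 10.0), "a"), (rng.uniform(0.0, 10.0), "b")])
        for _, name in arrivals:
            if name == "a":
                a = value
            else:
                b = value
            new_out = c_element(a, b, out)
            if new_out != out:
                out = new_out
                outputs.append(out)
    return outputs


# Whatever the delays, the output rises exactly once and then falls exactly once.
rng = random.Random(0)
print({tuple(run_handshake(rng)) for _ in range(1000)})  # {(1, 0)}
```

A clocked design would instead rely on the clock period exceeding the worst-case delay; in this sketch, correctness comes from the handshake ordering alone.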


Automating physical design

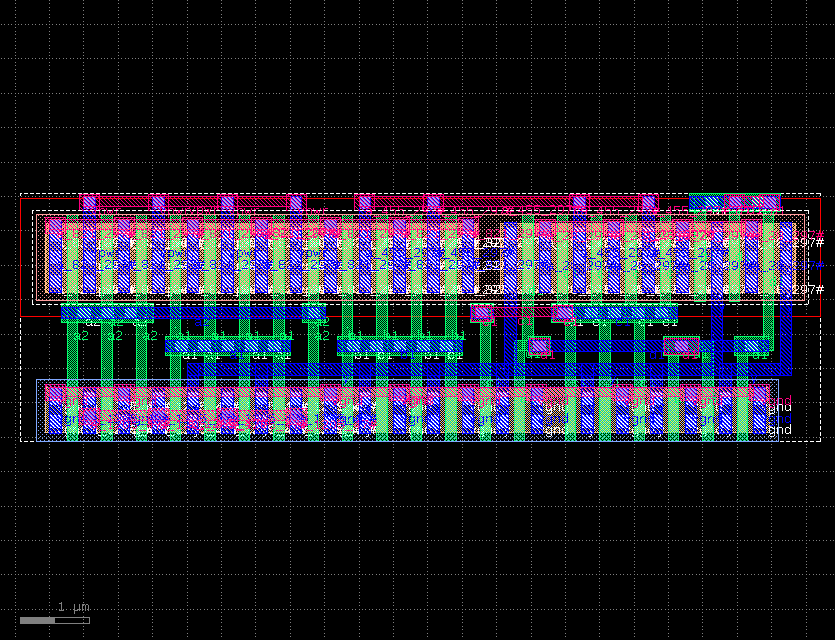

Cell layouts are generated automatically, and design rules are specified through a simple Python interface. Laying out all 2,752 cells in the Skywater PDK takes only 14 minutes. What would have taken 40 people 5 months to do manually has been reduced to a coffee break.
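Loom's actual Python interface for design rules is not shown here, so the following is only a hypothetical sketch of what such rules typically express: a per-layer minimum width and minimum spacing, checked over axis-aligned rectangles. `Rect`, `RULES`, and `check` are invented names, and the numbers are placeholders rather than Skywater 130nm values.

```python
import math
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle on one layer; coordinates in integer nanometres."""
    layer: str
    x0: int
    y0: int
    x1: int
    y1: int


# Hypothetical rule deck: layer -> minimum width and spacing in nm (placeholder values).
RULES = {
    "poly": {"min_width": 150, "min_spacing": 200},
    "metal1": {"min_width": 200, "min_spacing": 200},
}


def check(shapes: list[Rect]) -> list[tuple]:
    """Return (rule, layer, measured value) for every width or spacing violation."""
    violations = []
    for r in shapes:
        width = min(r.x1 - r.x0, r.y1 - r.y0)
        if width < RULES[r.layer]["min_width"]:
            violations.append(("width", r.layer, width))
    for a, b in combinations(shapes, 2):
        if a.layer != b.layer:
            continue
        # Horizontal and vertical gaps between the two rectangles (0 if they overlap)
        dx = max(0, b.x0 - a.x1, a.x0 - b.x1)
        dy = max(0, b.y0 - a.y1, a.y0 - b.y1)
        if dx == 0 and dy == 0:
            continue  # touching or overlapping shapes are treated as one polygon
        gap = math.hypot(dx, dy)  # Euclidean distance, so corner-to-corner gaps count too
        if gap < RULES[a.layer]["min_spacing"]:
            violations.append(("spacing", a.layer, gap))
    return violations


shapes = [
    Rect("metal1", 0, 0, 200, 1000),    # 200 nm wide: passes the width rule
    Rect("metal1", 300, 0, 500, 1000),  # 100 nm from the first shape: spacing violation
    Rect("poly", 0, 2000, 100, 3000),   # 100 nm wide: width violation
]
print(check(shapes))  # [('width', 'poly', 100), ('spacing', 'metal1', 100.0)]
```

A layout generator can run checks like these on every candidate placement, which is what lets rule changes propagate to all cells without manual rework.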

See the spec for Skywater 130nm.


Get started with Loom

Version 0.13.0 (pre-alpha) is available for Linux, Windows, and macOS.

curl -sL https://raw.githubusercontent.com/broccolimicro/loom/refs/heads/main/install.sh | sudo bash

Support the Development of Loom

Become a sponsor and help us improve and maintain Loom. Your support is greatly appreciated.

Individual

$10 / month

Support the development of Loom and receive exclusive updates.

Become a Sponsor

Organization

$2,000 / month

Support the development of Loom. Your bug tickets are prioritized, you have direct access to the team, and you may display your logo prominently on this site.

Become a Sponsor

Enterprise

$10,000 / month

Support the development of Loom. Your feature requests are prioritized, and you are given an advisory position in which you may guide development with bi-weekly 1:1s. You are allocated half an engineer for every multiple of this sponsorship. Your bug tickets are prioritized, you have direct access to the team, and you may display your logo prominently on this site.

Become a Sponsor

Broccoli was founded in December 2021 to bring about a categorical improvement in compute performance, and we believe that asynchronous design is the next step. With asynchronous design, it is possible to implement complex control that takes advantage of Pareto (80/20) patterns in the workload to dramatically improve performance and power. However, asynchronous design has proven far too difficult to be commercially viable. Our first step toward achieving our mission is to remove that blocker with Loom.